- 1 Introduction

- 2 Modeling Market Values

- 2.1 Feature Engineering

- 2.1.1 Base Model / Data Construction

- 2.2 Model Evaluation / Training

- 2.3 Predictions

Part 3

Eric Langowski

1 Introduction

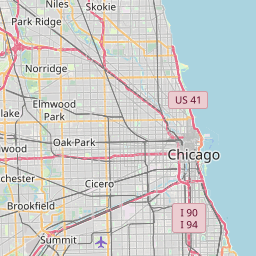

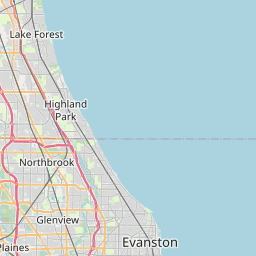

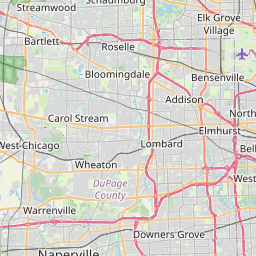

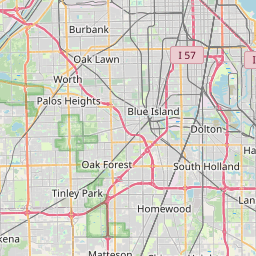

Cook County, Illinois includes almost 2 million parcels ranging from suburban mansions to thousand feet skyscrapers. The system is administered by a series of countywide agencies and it is responsible for fairly dividing property tax levies (the amounts that taxing bodies such as school districts levy against properties in their districts) among all these properties.

In the past, assessments in Cook County were highly regressive, where lower valued properties paid a significantly higher tax rate than higher valued properties. A study by the Center for Municipal Finance at the University of Chicago showed that from 2011 to 2015 that these regressive assessments lead to over $2 billion of property tax liability being paid by lower valued homes instead of higher valued ones.

This study, alongside a public reckoning, resulted in the election of a new assessor in 2018.

This analysis focuses on single family homes Additionally, using the

cmfproperty package, the IAAO arm’s length standard was

applied to the data. This will present a conservative picture of the

housing market in Cook County. Note that homes in Cook County as

supposed to be assessed at 10% of their fair market value. For clarity,

assessed values here are converted to market values.

The sales ratio is a key unit for analyzing the accuracy of assessments. It is calculated by taking the ratio of a property’s sale price to its assessment at the time of sale. The sales ratios can be evaluated using metrics from the International Association of Assessing Officers.

iaao <- cmfproperty::iaao_graphs(stats=stats, ratios=ratios, min_reporting_yr = min_yr, max_reporting_yr = max_yr, jurisdiction_name = 'Cook County')For 2023, the COD in Cook County was 18.99 which did not meet the IAAO standard for uniformity.

iaao[[2]]In 2023, the PRD in Cook County, was 1.009 which meets the IAAO standard for vertical equity.

iaao[[4]]In 2023, the PRB in Cook County was 0.004 which indicates that sales ratios increase by 0.4% when home values double. This meets the IAAO standard.

iaao[[6]]bs <- cmfproperty::binned_scatter(ratios = ratios, min_reporting_yr = min_yr, max_reporting_yr = max_yr, jurisdiction_name = 'Cook County')In 2023, the most expensive homes (the top decile) were assessed at 77.1% of their value and the least expensive homes (the bottom decile) were assessed at 91.8%. In other words, the least expensive homes were assessed at 1.19 times the rate applied to the most expensive homes. Across our sample from 2021 to 2023, the most expensive homes were assessed at 77.2% of their value and the least expensive homes were assessed at 82.6%, which is 1.07 times the rate applied to the most expensive homes.

bs[[2]]2 Modeling Market Values

We will now generate our own assessments for tax year 2023 for the City of Chicago. We have a base model from Part 2 which we will initially state and then expand upon. I will also demonstrate the xgboost (boosted decision tree) package. Boosted decision trees are similar to random forests except each tree is created iteratively in a process of continuous improvement.

Predictions will then be made for 2023 compared to the baseline of actual assessed values. Workflow is the same except that xgboost requires us to bake our data first.

Note that instead of joining assessments to sales we will join sales to assessments since we need to make a prediction for every property.

2.1 Feature Engineering

We will compare different models to see if engineering new features leads to any model improvement.

2.1.1 Base Model / Data Construction

Our base model will be very simple:

sp_log ~ char_yrblt + char_bldg_sf + char_land_sf + township_name- Model 1: Add time of sale adjustments and dow/month/year features

- Model 2: Model 1 + Add median sale price per square foot by neighborhood

- Model 3: Model 1 + Add census tract information

- Model 4: Model 1 + Add distance to nearest CTA (rail) stop

- Model 5: Model 1 + Model 2 + Model 4

nbhd_avg_chars <- ratios %>% select(pin, nbhd_code, SALE_PRICE) %>%

left_join(characteristics %>%

select(pin, char_yrblt, char_bldg_sf, char_land_sf) %>%

distinct(pin, .keep_all=TRUE),

join_by('pin')) %>%

filter(char_bldg_sf > 0, !is.na(char_bldg_sf)) %>%

group_by(nbhd_code) %>%

summarize(

mean_sale_sqft = mean(SALE_PRICE / char_bldg_sf, na.rm=T),

median_sale_sqft = median(SALE_PRICE / char_bldg_sf, na.rm=T))

joined_full <- assessments %>%

filter(township_code >= 70) %>% #chicago townships

mutate(assessed_value = if_else(tax_year == 2023, 10*certified_tot, 10*board_tot)) %>%

select(pin, tax_year, class, township_name, assessed_value) %>%

left_join(

sales %>%

mutate(year = year(ymd(sale_date)),

sale_date = as_date(sale_date)) %>%

filter(sale_filter_less_than_10k == 0,

sale_filter_deed_type == 0,

is_multisale == 0) %>%

distinct(pin, year, .keep_all=TRUE) %>%

select(pin, year, sale_price, sale_date, year),

join_by('pin', 'tax_year'=='year')

) %>%

left_join(characteristics %>%

select(pin, char_yrblt, char_bldg_sf, char_land_sf) %>%

distinct(pin, .keep_all=TRUE),

join_by('pin')) %>%

left_join(ratios %>%

select(pin, SALE_YEAR) %>%

mutate(arms_length = 1),

join_by('pin', 'tax_year'=='SALE_YEAR')) %>%

left_join(parcel_geo %>% select(pin, nbhd_code, GEOID, nearest_cta_stop) %>%

mutate(nbhd_code = as.character(nbhd_code),

nearest_cta_stop = as.double(nearest_cta_stop))) %>%

left_join(nbhd_avg_chars)## Joining with `by = join_by(pin)`

## Joining with `by = join_by(nbhd_code)`model_data <- joined_full %>%

filter(tax_year < 2023,

!is.na(char_yrblt),

!is.na(sale_price),

!is.na(GEOID),

arms_length == 1) %>%

mutate(sp_log = log(sale_price))

split <- rsample::initial_split(model_data)

train <- training(split)

test <- testing(split)

reg_model <- boost_tree(trees=200) %>%

set_mode('regression') %>%

set_engine('xgboost')base <- recipe(sp_log ~ char_yrblt + char_bldg_sf +

char_land_sf + township_name,

data=train) %>%

step_dummy(all_nominal_predictors()) %>%

step_corr(all_predictors()) %>% prep()

m_one <- recipe(sp_log ~ sale_date +

char_yrblt + char_bldg_sf +

char_land_sf + township_name,

data=train) %>%

step_date(sale_date, features = c("dow", "month", "year"), keep_original_cols = FALSE) %>%

step_dummy(all_nominal_predictors()) %>%

step_corr(all_predictors()) %>% prep()

m_two <- recipe(sp_log ~ sale_date +

char_yrblt + char_bldg_sf +

char_land_sf + township_name +

mean_sale_sqft,

data=train) %>%

step_date(sale_date, features = c("dow", "month", "year"), keep_original_cols = FALSE) %>%

step_dummy(all_nominal_predictors()) %>%

step_corr(all_predictors()) %>% prep()

m_three <- recipe(sp_log ~ sale_date +

char_yrblt + char_bldg_sf +

char_land_sf + township_name +

GEOID,

data=train) %>%

step_date(sale_date, features = c("dow", "month", "year"), keep_original_cols = FALSE) %>%

step_other(GEOID, threshold = 0.001) %>%

step_novel(all_nominal_predictors()) %>%

step_unknown(all_nominal_predictors()) %>%

step_dummy(all_nominal_predictors()) %>%

step_corr(all_predictors()) %>% prep()

m_four <- recipe(sp_log ~ sale_date +

char_yrblt + char_bldg_sf +

char_land_sf + township_name +

nearest_cta_stop,

data=train) %>%

step_date(sale_date, features = c("dow", "month", "year"), keep_original_cols = FALSE) %>%

step_dummy(all_nominal_predictors()) %>%

step_corr(all_predictors()) %>% prep()

m_five <- recipe(sp_log ~ sale_date +

char_yrblt + char_bldg_sf +

char_land_sf + township_name +

mean_sale_sqft + nearest_cta_stop,

data=train) %>%

step_date(sale_date, features = c("dow", "month", "year"), keep_original_cols = FALSE) %>%

step_dummy(all_nominal_predictors()) %>%

step_corr(all_predictors()) %>% prep()

our_workflows <-

workflow_set(

preproc = list(base = base, m1 = m_one, m2 = m_two, m3 = m_three, m4 = m_four, m5 = m_five),

models = list(xgb = reg_model)

)2.2 Model Evaluation / Training

We will take our training data and resample it 3-fold. Typically we would use more resamples but for computational simplicity 3 is fine. We then train our model across our resamples for our five candidate models.

train_resample <- rsample::vfold_cv(train, v = 3)

keep_pred <- control_resamples(save_pred = TRUE, save_workflow = TRUE)

models <-

our_workflows %>%

workflow_map("fit_resamples",

# Options to `workflow_map()`:

seed = 1101, verbose = TRUE,

# Options to `fit_resamples()`:

resamples = train_resample, control = keep_pred)## i 1 of 6 resampling: base_xgb## ✔ 1 of 6 resampling: base_xgb (5.1s)## i 2 of 6 resampling: m1_xgb## ✔ 2 of 6 resampling: m1_xgb (9.4s)## i 3 of 6 resampling: m2_xgb## ✔ 3 of 6 resampling: m2_xgb (9.4s)## i 4 of 6 resampling: m3_xgb## → A | warning: the standard deviation is zero, The correlation matrix has missing values. 8 columns were excluded from the

## filter.## There were issues with some computations A: x1## There were issues with some computations A: x2## There were issues with some computations A: x3

## There were issues with some computations A: x3## ## ℹ The workflow being saved contains a recipe, which is 39.36 Mb in ℹ memory. If

## this was not intentional, please set the control setting ℹ `save_workflow =

## FALSE`.## ✔ 4 of 6 resampling: m3_xgb (1m 8.2s)## i 5 of 6 resampling: m4_xgb## ✔ 5 of 6 resampling: m4_xgb (6.6s)## i 6 of 6 resampling: m5_xgb## ✔ 6 of 6 resampling: m5_xgb (6.9s)collect_metrics(models) %>%

select(wflow_id, .metric, mean) %>%

pivot_wider(names_from = .metric,

values_from = mean) %>%

make_table()autoplot(models, metric = "rmse") +

ggrepel::geom_text_repel(aes(label = wflow_id), nudge_x = 1/8, nudge_y = 1/100) +

theme(legend.position = "none")collect_metrics(models, summarize=FALSE) %>%

filter(.metric == "rmse") %>%

mutate(wflow_id = reorder(wflow_id, .estimate)) %>%

ggplot(aes(x = wflow_id, y = .estimate, group = id, color = id)) +

geom_line(alpha = .5, lwd = 1.25) We can see that all of our models offer some improvement. Model 5 is best.

model_fit_reg <- workflow() %>%

add_recipe(m_five) %>%

add_model(reg_model) %>%

fit(train)

our_preds <- model_fit_reg %>% augment(test)

multi_metric <- metric_set(mape, rmse, rsq)

multi_metric(our_preds, truth=sp_log, estimate=.pred) %>%

kableExtra::kable() %>%

kableExtra::kable_material(c("striped", "hover"))| .metric | .estimator | .estimate |

|---|---|---|

| mape | standard | 1.9307140 |

| rmse | standard | 0.3256295 |

| rsq | standard | 0.8170624 |

Actual assessments:

multi_metric(our_preds %>% mutate(av_log = log(assessed_value)), truth=sp_log, estimate=av_log) %>%

kableExtra::kable() %>%

kableExtra::kable_material(c("striped", "hover"))| .metric | .estimator | .estimate |

|---|---|---|

| mape | standard | 2.8445893 |

| rmse | standard | 0.4729154 |

| rsq | standard | 0.8294133 |

ratios_new <- cmfproperty::reformat_data(

our_preds %>% mutate(av_pred = exp(.pred)),

'sale_price',

'av_pred',

'tax_year'

)## [1] "Filtered out non-arm's length transactions"

## [1] "Inflation adjusted to 2021"bs <- cmfproperty::binned_scatter(ratios = ratios_new, min_reporting_yr = 2021, max_reporting_yr = 2022,

jurisdiction_name = 'Cook County')In 2022, the most expensive homes (the top decile) were assessed at 95.5% of their value and the least expensive homes (the bottom decile) were assessed at 124.9%. In other words, the least expensive homes were assessed at 1.31 times the rate applied to the most expensive homes. Across our sample from 2021 to 2022, the most expensive homes were assessed at 96.1% of their value and the least expensive homes were assessed at 121.6%, which is 1.27 times the rate applied to the most expensive homes.

bs[[2]]2.3 Predictions

We now fit/train our model on all the sale data and run predictions on all properties.

trained <- workflow() %>%

add_recipe(m_five) %>%

add_model(reg_model) %>% fit(model_data)

all_predictions <- trained %>% augment(joined_full %>% filter(tax_year == 2023) %>%

mutate(sale_date = ymd('2023-01-01')))

all_predictions %>%

select(pin, assessed_value, .pred) %>%

mutate(.pred = exp(.pred)) %>%

pivot_longer(!pin) %>%

ggplot(aes(x=value, color=name, fill=name)) +

geom_density(alpha=.3) +

scale_x_log10(labels=dollar) +

labs(x = 'Assessed Value', y='Density',

fill = 'Type', color='Type', title='Density of Predictions and AV')library(DALEXtra)## Loading required package: DALEX## Welcome to DALEX (version: 2.4.3).

## Find examples and detailed introduction at: http://ema.drwhy.ai/

## Additional features will be available after installation of: ggpubr.

## Use 'install_dependencies()' to get all suggested dependencies##

## Attaching package: 'DALEX'## The following object is masked from 'package:dplyr':

##

## explainexplain_reg <- explain_tidymodels(

trained,

data = train %>% select(-sp_log),

y = train$sp_log,

verbose=TRUE

)## Preparation of a new explainer is initiated

## -> model label : workflow ( default )

## -> data : 14722 rows 16 cols

## -> data : tibble converted into a data.frame

## -> target variable : 14722 values

## -> predict function : yhat.workflow will be used ( default )

## -> predicted values : No value for predict function target column. ( default )

## -> model_info : package tidymodels , ver. 1.1.1 , task regression ( default )

## -> predicted values : numerical, min = 9.823296 , mean = 12.63385 , max = 15.79478

## -> residual function : difference between y and yhat ( default )

## -> residuals : numerical, min = -1.406362 , mean = 0.0002936729 , max = 0.8248037

## A new explainer has been created!targ <- model_parts(explain_reg, loss_function = loss_root_mean_square)

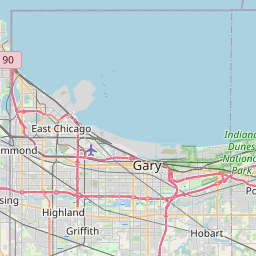

plot(targ)Now lets model our predictions across race and make a map.

cook <- get_acs(geography = "tract",

variables = c(white_alone = "B02001_002",

total_pop = "B02001_001",

medincome = "B19013_001"),

state=17, county=031,

output='wide') %>%

mutate(pct_nonwhite = (total_popE - white_aloneE) / total_popE) %>%

select(GEOID, pct_nonwhite, medincomeE)## Getting data from the 2017-2021 5-year ACSgeoid_avg <- all_predictions %>% left_join(

parcel_geo %>% select(pin, GEOID)) %>%

group_by(GEOID) %>%

summarize(

avg_prediction = mean(.pred, na.rm=T),

size = n()

) %>%

filter(!is.na(GEOID)) %>%

left_join(cook)## Joining with `by = join_by(pin, GEOID)`## Joining with `by = join_by(GEOID)`ggplot(geoid_avg, aes(x=exp(avg_prediction), y=pct_nonwhite)) +

geom_point(alpha=.3, size=3) +

scale_x_log10(labels=dollar) +

geom_smooth(se=FALSE) +

labs(x='Average Value Prediction',

y='Pct NonWhite Tract', title='Predicted Value by NonWhite Pop')## `geom_smooth()` using method = 'loess' and formula = 'y ~ x'library(leaflet)

geo_data <- chicago_tracts %>%

left_join(geoid_avg) %>%

mutate(avg_prediction = round(exp(avg_prediction), -2))## Joining with `by = join_by(GEOID)`label_str <- str_glue("<strong>Tract %s</strong><br> Avg Value %s<br>")

labels <- sprintf(label_str,

geo_data$GEOID,

dollar(geo_data$avg_prediction)) %>%

lapply(htmltools::HTML)

### make palette

pal1 <-

colorQuantile(

palette = "Oranges",

domain = geo_data$avg_prediction,

na.color = "Grey",

n=7

)

m <- leaflet() %>%

addTiles() %>% addPolygons(

data = geo_data,

fillColor = ~ pal1(avg_prediction),

weight = 0.5,

opacity = 0.5,

color = "white",

dashArray = 3,

fillOpacity = 0.7,

highlight = highlightOptions(

weight = 5,

color = "#666",

dashArray = "",

fillOpacity = 0.7,

bringToFront = TRUE

),

label = labels,

labelOptions = labelOptions(

style = list("font-weight" = "normal", padding = "3px 8px"),

textsize = "15px",

direction = "auto"

)

)

m